What Is Bingbot: Understanding Bing’s Web Crawler And Its Behavior

Web crawlers play a crucial role in the functioning of search engines by systematically browsing and indexing web pages. One such web crawler is Bingbot, which belongs to the search engine Bing. This article aims to provide an in-depth understanding of Bingbot and its behavior, allowing website owners and developers to optimize their sites for better visibility on Bing’s search results.

By comprehending how Bingbot operates, website owners can ensure that their content is effectively crawled and indexed by Bing’s search engine. Additionally, monitoring Bingbot’s activity can help identify any issues or errors that may arise during the crawling process.

This article will also discuss common issues and troubleshooting techniques to assist website owners in resolving any problems encountered with Bingbot. Overall, this article will serve as a comprehensive guide for understanding Bingbot and leveraging its capabilities to maximize the visibility of websites on Bing’s search engine.

Key Takeaways

- Bingbot is Bing’s web crawler responsible for visiting and indexing web pages.

- Bingbot follows links on web pages to discover new content and updates its index accordingly.

- Bingbot has advantages like efficient crawling and indexing, ability to follow links, and compliance with robots.txt rules.

- Bingbot also has limitations, such as infrequent crawling of less popular sites, limits on how reliably it renders JavaScript-heavy pages, and an inability to crawl pages behind a login.

The Importance of Web Crawlers

Web crawlers are essential to the online ecosystem: they make it possible to index and retrieve vast amounts of information, giving users efficient access to relevant resources.

Web crawlers, also known as spiders or bots, are automated programs that systematically navigate the internet, exploring web pages and collecting data. By following links and analyzing website structures, web crawlers gather information which is then used by search engines to create indexes and provide relevant search results to users.

Web crawlers play a vital role in ensuring the accuracy and timeliness of search engine results, as they continuously update and refresh their indexes to reflect the ever-changing online landscape.

Without web crawlers, accessing and organizing the vast amount of information available on the internet would be an arduous and time-consuming task for both users and search engines alike.

What is Bingbot?

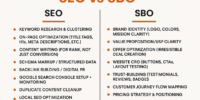

Bing’s web indexing system explores and analyzes web pages to gather information. One crucial component of this system is Bingbot, Bing’s web crawler. Bingbot is responsible for visiting and indexing web pages, allowing Bing to provide relevant search results to its users. Like other web crawlers, Bingbot follows links on web pages to discover new content and updates its index accordingly. To determine which pages it may crawl, it follows the rules that each website owner publishes in the site’s robots.txt file. These rules guide Bingbot’s behavior, ensuring that it respects website owners’ preferences and operates within the bounds of web etiquette. By understanding Bingbot’s role and behavior, website owners can optimize their sites for better visibility and ranking on Bing’s search engine.

| Bingbot Advantages | Bingbot Limitations |
|---|---|
| Efficient crawling and indexing of web pages | Limited crawling frequency for less popular sites |
| Ability to follow links and discover new content | Limited, resource-constrained rendering of JavaScript-heavy pages |
| Compliance with robots.txt rules | Inability to crawl pages behind a login |

Note: Bingbot’s behavior and capabilities may evolve over time, so it is essential to stay updated with the latest guidelines from Bing.
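To make the robots.txt rules concrete, the following minimal sketch uses Python’s standard `urllib.robotparser` module to check whether a crawler identifying itself as `bingbot` may fetch a given URL. The robots.txt content, the `example.com` URLs, and the `is_allowed` helper are hypothetical and chosen only for illustration; the sketch shows how such rules are commonly interpreted, not how Bingbot itself is implemented.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: bingbot
Disallow: /private/

User-agent: *
Disallow: /tmp/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given user agent may fetch the URL under these rules."""
    parser = RobotFileParser()
    # parse() accepts the file's lines, so no network request is needed here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/blog/post", "https://example.com/private/report"):
        print(url, is_allowed(ROBOTS_TXT, "bingbot", url))
```

Under these sample rules, the blog URL is allowed for `bingbot` while anything under `/private/` is not.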

How Bingbot Operates

Operating within the rules set out in each site’s robots.txt file, Bingbot crawls web pages, analyzes their content, and follows links to discover new information.

Bingbot operates by sending HTTP requests to web servers and retrieving the HTML content of web pages. It then extracts relevant information, such as text, images, and metadata, to understand the content and context of the page.

Bingbot also follows hyperlinks within the page to discover and crawl additional web pages. To ensure efficient crawling, Bingbot employs various techniques, such as prioritizing frequently updated pages and respecting crawl-delay directives.

It also adheres to webmaster guidelines to maintain a fair and ethical crawling process. Bingbot’s objective is to provide search engine users with up-to-date and relevant search results by effectively indexing the ever-growing web.
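The fetch, extract, and follow cycle described above can be sketched in a few lines of Python. This is a simplified illustration of link-following crawlers in general, not Bingbot’s actual implementation: the `PAGES` dictionary stands in for real HTTP responses, and the `LinkExtractor` class and `crawl` function are hypothetical names.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from the <a href="..."> tags of one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


# Hypothetical in-memory site standing in for real HTTP responses.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}


def crawl(start_url, pages):
    """Visit pages breadth-first, following links, and return the visit order."""
    seen = {start_url}
    queue = deque([start_url])
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)
        extractor = LinkExtractor(url)
        extractor.feed(pages.get(url, ""))
        for link in extractor.links:
            # Skip unknown pages and anything already queued or visited.
            if link in pages and link not in seen:
                seen.add(link)
                queue.append(link)
    return order
```

A production crawler would add robots.txt checks, rate limiting, and prioritization on top of this basic loop.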

Bingbot’s Behavior

Bingbot’s behavior is shaped by its adherence to webmaster guidelines and its objective of providing search engine users with relevant and up-to-date search results. It follows a set of rules and protocols to crawl and index webpages, ensuring that the search results it delivers are accurate and reliable. Bingbot respects the robots.txt file, which allows website owners to control the crawling and indexing of their site. It also follows guidelines related to crawl rate, avoiding excessive requests to a website that could potentially overload the server. Moreover, Bingbot prioritizes fresh content, regularly revisiting websites to update its index with new information. This behavior ensures that users receive the most current and relevant search results.

| Behavior | Description |
|---|---|
| Adherence to guidelines | Bingbot follows webmaster guidelines to ensure fair and ethical crawling and indexing of websites. |
| Respect for robots.txt | Bingbot adheres to the instructions specified in the robots.txt file to respect website owner preferences. |
| Controlled crawl rate | Bingbot avoids overwhelming servers by adhering to crawl rate limits specified by website owners. |
| Prioritizes fresh content | Bingbot frequently revisits websites to update its index with the latest information. |
| Supports relevant search results | Bingbot’s behavior is geared towards keeping Bing’s index accurate and up to date, so that users receive current results. |
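As a rough illustration of a controlled crawl rate, the sketch below reads a `Crawl-delay` value from robots.txt with Python’s standard `urllib.robotparser` and spaces out a list of fetches accordingly. The robots.txt content, the helper names `crawl_delay_for` and `schedule`, and the one-second fallback are assumptions made for this example; Bingbot’s real scheduling logic is not public.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking bingbot to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: bingbot
Crawl-delay: 10
"""


def crawl_delay_for(robots_txt, user_agent, default=1.0):
    """Return the Crawl-delay for a user agent, or a fallback if none is set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


def schedule(urls, delay):
    """Pair each URL with the offset (in seconds) at which it may be fetched."""
    return [(index * delay, url) for index, url in enumerate(urls)]
```

With the sample rules, three URLs would be scheduled at offsets of 0, 10, and 20 seconds for `bingbot`, while an agent with no matching rule falls back to the assumed one-second default.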

Optimizing Your Website for Bingbot

Optimizing a website involves implementing strategies to enhance its visibility and performance in search engine results, ensuring that it is effectively indexed by search engine crawlers such as Bingbot. To optimize your website for Bingbot, consider the following steps:

- Ensure your website has a clear and well-structured navigation system, making it easy for Bingbot to crawl and understand the content.

- Use descriptive and relevant meta tags, including title tags and meta descriptions, to provide accurate information about your web pages.

- Create high-quality and unique content that is optimized with relevant keywords, as Bingbot values informative and original content.

When you follow these optimization techniques, Bingbot can more easily navigate and index your website, which can improve its visibility in Bing’s results and attract more organic traffic.

Remember to regularly monitor your website’s performance and make necessary adjustments to keep up with the evolving search engine algorithms.
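One way to check the title and meta description advice is a small audit script. The sketch below uses Python’s standard `html.parser` to report pages with a missing `<title>` or meta description. The `HeadAudit` class and `audit_head` function are hypothetical names, and the check covers only presence, not quality or length.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Records the page title and meta description while parsing HTML."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_head(html):
    """Return a list of problems found in the page's title and description."""
    parser = HeadAudit()
    parser.feed(html)
    problems = []
    if not parser.title.strip():
        problems.append("missing <title>")
    if not (parser.description or "").strip():
        problems.append("missing meta description")
    return problems
```

Running `audit_head` over each page’s HTML gives a quick list of pages that need attention before deeper content review.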

Monitoring Bingbot’s Activity

One important aspect of maintaining an optimized website is to regularly monitor the activity of the search engine crawler, Bingbot, to ensure that it is effectively indexing and ranking your web pages. By monitoring Bingbot’s activity, website owners can gain valuable insights into how their site is being crawled and indexed by Bing’s search engine. This information can help identify any issues or errors that may be hindering the crawling and indexing process. Additionally, monitoring Bingbot’s activity allows website owners to track the frequency and timing of crawls, as well as the number of pages crawled per visit. This data can be used to optimize the website’s performance and ensure that it is being properly indexed by Bing.

| Insight | Actions |
|---|---|
| Identify any issues or errors | Fix any technical issues or errors that may be hindering the crawling and indexing process. |
| Track frequency and timing of crawls | Schedule content updates and website maintenance during periods of low crawl activity. |
| Monitor number of pages crawled per visit | Ensure that important pages are being crawled and indexed by Bing. |
| Detect a low crawl rate | Promptly resolve any technical issues or errors that may be causing it, so that Bing’s crawler can efficiently crawl and index all relevant pages on the website. |
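Server access logs are one common source for this kind of monitoring. The following sketch assumes logs in the widely used combined log format and counts Bingbot requests per day and per HTTP status code. The sample log lines, IP addresses, and the `summarize_bingbot` function are hypothetical; matching on the user agent string alone does not prove that a request really came from Bing.

```python
import re
from collections import Counter

# Matches the date, request line, status, and user agent of a combined-format log line.
LOG_LINE = re.compile(
    r'\[(?P<day>[^:]+):[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)

# Hypothetical sample lines, for illustration only.
SAMPLE_LOG = [
    '157.55.39.1 - - [10/Mar/2024:06:25:13 +0000] "GET /blog HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '157.55.39.1 - - [10/Mar/2024:06:25:40 +0000] "GET /old-page HTTP/1.1" 404 312 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.9 - - [10/Mar/2024:07:02:01 +0000] "GET / HTTP/1.1" 200 8120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]


def summarize_bingbot(lines):
    """Count requests whose user agent mentions bingbot, by day and by status."""
    per_day = Counter()
    per_status = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if not match or "bingbot" not in match["agent"].lower():
            continue
        per_day[match["day"]] += 1
        per_status[match["status"]] += 1
    return per_day, per_status
```

A rising share of 404 or 5xx responses in the status counts is a useful early signal that crawling problems need investigation.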

Common Issues and Troubleshooting

With monitoring covered, this section turns to common issues that arise with Bingbot and techniques for troubleshooting them.

Despite its efficiency, Bingbot may encounter certain challenges during web crawling. One common issue is the improper indexing of web pages, resulting in pages not appearing in Bing search results. This can be rectified by ensuring that the website is structured correctly and that the content is easily accessible for crawling.

Another issue is excessive crawling, which can overload the server and negatively impact website performance. To address this, webmasters can limit the crawl rate in Bing Webmaster Tools.

Additionally, Bingbot may face difficulties with websites that utilize JavaScript or AJAX technologies. In such cases, webmasters can provide static HTML versions of the pages to facilitate proper indexing by Bingbot.
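Serving a static HTML version to the crawler can be sketched as a simple choice based on the request’s user agent. The function names `wants_prerendered` and `choose_variant` are hypothetical, and this is only an outline of the idea: the static version should carry the same content as the JavaScript version, and user agent strings can be spoofed.

```python
def wants_prerendered(user_agent):
    """Return True if the user agent string identifies itself as Bingbot."""
    return "bingbot" in (user_agent or "").lower()


def choose_variant(user_agent, static_html, dynamic_html):
    """Pick the static HTML for Bingbot and the JavaScript-driven page otherwise."""
    return static_html if wants_prerendered(user_agent) else dynamic_html
```

In a real site this decision would sit in the web server or application middleware, with the static HTML generated ahead of time or on demand.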

Frequently Asked Questions

How does Bingbot determine the relevance of a webpage for search engine rankings?

Bingbot itself only crawls and collects pages; Bing’s ranking systems then evaluate factors such as keyword usage, content quality, page structure, and user experience. These factors help determine the webpage’s relevance and its position in search engine results.

Can Bingbot crawl websites that require user authentication or have restricted access?

No. Bingbot does not log in to websites, so it cannot crawl pages that require user authentication or are otherwise restricted. It also respects the access rules that the website owner sets in robots.txt.

Does Bingbot prioritize crawling frequently updated webpages over static ones?

Yes. Bingbot prioritizes frequently updated pages and revisits them more often so that its index reflects new information. Static pages are still crawled, but typically less frequently.

What is the frequency at which Bingbot revisits a webpage for recrawling?

The frequency at which Bingbot revisits a webpage for recrawling depends on various factors, including the importance and popularity of the webpage, the rate of content updates, and the server’s ability to handle crawl requests.

Are there any specific guidelines for optimizing images on a website for better Bingbot indexing?

Optimizing images on a website for better Bingbot indexing can be achieved by reducing image file size, using descriptive file names and alt text, ensuring proper image format, and providing relevant image captions and surrounding text.
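The alt text recommendation is easy to check automatically. This minimal sketch uses Python’s standard `html.parser` to list images whose `alt` attribute is missing or empty. The `ImageAudit` class and `images_missing_alt` function are hypothetical names, and the check does not judge whether existing alt text is actually descriptive.

```python
from html.parser import HTMLParser


class ImageAudit(HTMLParser):
    """Collects the src of every <img> tag that lacks non-empty alt text."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        if not (attrs.get("alt") or "").strip():
            self.missing_alt.append(attrs.get("src") or "(no src)")


def images_missing_alt(html):
    """Return the src values of images without usable alt text."""
    audit = ImageAudit()
    audit.feed(html)
    return audit.missing_alt
```

The same pattern could be extended to flag generic file names or very large image files.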