Facebook A/B Testing Vs. Split Testing: Experimenting With Ad Variations For Optimization

In the realm of digital advertising, Facebook provides a fertile platform for marketers to engage with a broad spectrum of audiences. The effectiveness of a marketing campaign depends largely on how well its ads resonate with the target audience. To maximize this resonance and the subsequent return on investment, marketers employ testing methodologies such as A/B testing and split testing. These strategies facilitate empirical decision-making by enabling the comparison of different ad variations based on performance metrics.

This article delves into the fundamental principles of A/B and split testing, highlighting their differences and respective merits. It also provides a guide to running these tests on Facebook and discusses how to interpret and apply test results for optimization. Understanding these testing techniques is essential for advertisers aiming to enhance campaign performance and fully harness the potential of Facebook’s vast user base.

Understand the Basics of A/B Testing

A/B testing, fundamentally, serves as a controlled experiment that compares two versions of a webpage or other user experience to determine which performs better.

It involves presenting two variations, A and B, to similar visitors simultaneously and then analyzing statistical data to discern which variation accomplished the objective more effectively.

This method is commonly employed in marketing to optimize web pages, emails, and online advertisements.

Though the two versions are typically identical barring one element, that minor alteration can significantly impact user interaction and engagement.

The modification could be as simple as changing a call-to-action button color or as complex as a complete webpage redesign.

Consequently, A/B testing is a critical tool for marketers to increase conversions and improve user experience.
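The statistical comparison of variations A and B described above can be sketched with a two-proportion z-test. This is a minimal illustration using only the Python standard library, not a description of how any particular platform evaluates tests; the function name and the click and impression counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: clicks out of impressions for each variation.
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=250, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (commonly below 0.05) suggests that the observed difference between the variations is unlikely to be due to chance alone.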

Understand the Basics of Split Testing

Split testing, a term often used interchangeably with A/B testing, is a method of conducting controlled, randomized experiments with the goal of improving a website metric, such as clicks, form completions, or purchases.

It operates by comparing two versions of a webpage (version A and version B) to see which performs better.

Through this process, businesses can make careful changes to their user experiences while collecting data on the results, enabling them to construct hypotheses, and to learn better why certain elements of their experiences impact user behavior.

Definition and Purpose

Exploring the concepts of Facebook A/B testing and split testing requires understanding their definition and purpose in the realm of advertising. A/B testing involves comparing two versions of an ad to determine which performs better. This can be measured by engagement, click-through rates, or conversions.

Split testing, on the other hand, compares multiple variations of an ad set against each other, with each variation shown to a different audience.

The purpose of these testing methods is fourfold:

- To identify which ad variation elicits more favorable responses.
- To optimize ad performance by improving conversion rates.
- To gain insights into audience preferences and behavior.
- To save resources by directing budget towards ads that perform better.

These purposes underscore the importance of experimentation in advertising optimization.

How it Works

Understanding the mechanics of these approaches involves a thorough examination of the processes and techniques involved.

In Facebook A/B testing, two or more variations of an ad are displayed to different segments of the audience. The performance of each ad variant is tracked and analyzed, with the objective of determining which variant drives the desired action most effectively.

By contrast, split testing, in the multivariate sense used in this article, involves the simultaneous comparison of multiple variables within an ad. These could include elements such as the headline, image, call to action, or ad placement.

The data collected from these experiments then informs future advertising decisions, thereby optimizing the effectiveness of the ad campaign.
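The idea of showing each variant to a different audience segment can be illustrated with deterministic hash-based bucketing. This is only a sketch of the principle: an ad platform would normally perform the audience split itself, and the function name, experiment label, and user IDs below are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, variants: list[str], experiment: str = "ad_test") -> str:
    """Map a user to exactly one variant, stably across repeated calls."""
    # Hashing the experiment label with the user ID gives each experiment
    # its own independent, roughly uniform split of the audience.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


variants = ["A", "B"]
counts = {v: 0 for v in variants}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", variants)] += 1
print(counts)  # the two groups should be of roughly equal size
```

Because the assignment depends only on the user ID and the experiment label, a given user always sees the same variant, which keeps the groups from overlapping.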

Both methods are valuable tools for refining advertising strategies, but their application depends on the specific objectives of the campaign.

Differences Between A/B and Split Testing

Although A/B testing and split testing appear identical in their purpose of optimizing ad performance, it is important to distinguish between them because their methodologies and applications differ.

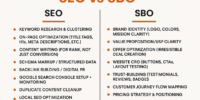

A/B testing involves comparing two versions of an ad to determine which performs better. It is usually conducted on one element at a time, such as headline, image, or call to action, to accurately ascertain the effect of each change.

Conversely, split testing, as the term is used here, takes a multivariate approach and examines the impact of multiple variables at once. It divides the audience into multiple groups that are shown different ad combinations. The primary advantage of split testing is its ability to provide insights into how various elements interact with each other. However, it requires a larger audience to produce statistically significant results.
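The reason a multi-variable test needs a larger audience can be seen by enumerating the ad combinations. The sketch below uses hypothetical headlines, image file names, and call-to-action labels; the audience size is likewise an assumed figure.

```python
from itertools import product


def ad_combinations(elements: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return every combination of the given ad elements."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*(elements[n] for n in names))]


# Hypothetical ad elements, two options each.
elements = {
    "headline": ["Save 20% today", "Free shipping"],
    "image": ["lifestyle.jpg", "product.jpg"],
    "call_to_action": ["Shop Now", "Learn More"],
}
combos = ad_combinations(elements)
print(len(combos))  # 2 * 2 * 2 = 8 combinations

audience = 40_000  # assumed total audience size
print(audience // len(combos))  # 5000 people per combination
```

Each additional element multiplies the number of groups, so the audience available to each combination shrinks quickly.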

Benefits of A/B Testing

With the distinguishing features of A/B and split testing established, the inherent advantages of A/B testing can now be examined.

A/B testing provides an empirical basis for making decisions that can significantly enhance the efficiency of Facebook ads. By creating two different versions of an ad and randomly distributing them to two similar audience subsets, this method allows the determination of which ad performs better based on quantifiable data. This eliminates guesswork, reduces risks associated with decision-making, and engenders more effective ad strategies.

Furthermore, A/B testing paves the way for continuous improvement as it facilitates understanding of the elements that resonate with the audience. The knowledge gained can then be leveraged to refine and optimize future ad campaigns, thus maximizing return on investment.

Benefits of Split Testing

Delving into the realm of split testing reveals its potent benefits in enhancing the effectiveness of online marketing strategies.

Primarily, split testing is a form of experimentation that allows marketing strategists to analyze the performance of two different versions of an ad to determine which one resonates better with the audience. This approach saves both time and resources as it enables marketers to identify the most effective strategies without having to experiment with numerous variations.

Split testing offers a reliable method for determining the most effective ad variations, thus minimizing guesswork.

It provides an opportunity for businesses to understand their audience better by analyzing their response to different ad versions.

This method fosters creativity and innovation as businesses are encouraged to develop different and unique ad variations for testing.

Lastly, split testing can significantly enhance the return on investment (ROI) by optimizing ad performance.

How to Conduct an A/B Test on Facebook

Comparing two advertising variations on Facebook requires a thorough understanding of the process involved in A/B testing.

This process involves creating two or more advertisements that differ in a single variable. The variable to be tested must be identified first; it could be the ad’s headline, image, or body text.

Once the variable has been chosen, the ads are shown to separate but comparable audience groups. The performance of each ad is then tracked and analyzed. The advertisement achieving the higher performance metrics, such as click-through rates or conversions, is deemed the more effective version.

This procedure provides a data-driven method for optimizing advertisement strategies, ensuring that marketing efforts yield the highest potential return.
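The tracking-and-comparison step can be sketched as follows. The `AdResult` class, the `compare_by_ctr` helper, and the figures are hypothetical and are not tied to any Facebook reporting API; in practice the numbers would come from the platform's own reports.

```python
from dataclasses import dataclass


@dataclass
class AdResult:
    name: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks divided by impressions."""
        return self.clicks / self.impressions if self.impressions else 0.0


def compare_by_ctr(a: AdResult, b: AdResult) -> tuple[AdResult, float]:
    """Return the ad with the higher CTR and its relative lift over the other."""
    winner, loser = (a, b) if a.ctr >= b.ctr else (b, a)
    if loser.ctr == 0:
        lift = 0.0 if winner.ctr == 0 else float("inf")
    else:
        lift = (winner.ctr - loser.ctr) / loser.ctr
    return winner, lift


# Hypothetical results for two ads that differ only in their headline.
ad_a = AdResult("Headline A", impressions=10_000, clicks=180)
ad_b = AdResult("Headline B", impressions=10_000, clicks=225)
winner, lift = compare_by_ctr(ad_a, ad_b)
print(f"{winner.name} wins with a relative lift of {lift:.0%}")
```

A higher click-through rate alone does not show that the difference is statistically meaningful, so a comparison like this is best paired with a significance check.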

How to Conduct a Split Test on Facebook

Comparing two separate marketing strategies on Facebook requires a clear understanding of the process involved in split testing. Facebook offers a versatile platform for conducting split tests, allowing marketers to compare the performance of two fundamentally different ad sets.

To conduct a split test on Facebook:

- Create two distinct ad sets with unique characteristics. These could include different target audiences, ad placements, or ad designs.
- Ensure that each ad set receives equal exposure and has similar budget allocation.
- Allow the test to run for a substantial period to collect adequate data for analysis.
- Analyze the results to identify the ad set that performed better.
- Apply the findings to optimize future marketing strategies on the platform.
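The requirement of equal budget and exposure in the steps above can be sketched with two small helpers. Both function names, the 5% tolerance, and the figures are assumptions made for illustration; budgets are handled in cents to avoid rounding errors.

```python
def split_budget_evenly(total_cents: int, ad_sets: list[str]) -> dict[str, int]:
    """Divide a budget (in cents) as evenly as possible across ad sets."""
    base, remainder = divmod(total_cents, len(ad_sets))
    # Any leftover cents go to the first ad sets, one cent each.
    return {name: base + (1 if i < remainder else 0) for i, name in enumerate(ad_sets)}


def exposure_is_balanced(impressions: dict[str, int], tolerance: float = 0.05) -> bool:
    """Check that each ad set's share of impressions is close to an equal share."""
    total = sum(impressions.values())
    if total == 0:
        return False
    expected = 1 / len(impressions)
    return all(abs(count / total - expected) <= tolerance for count in impressions.values())


print(split_budget_evenly(100_000, ["ad_set_1", "ad_set_2"]))
print(exposure_is_balanced({"ad_set_1": 5_100, "ad_set_2": 4_900}))
print(exposure_is_balanced({"ad_set_1": 7_000, "ad_set_2": 3_000}))
```

If the exposure check fails, differences in performance may reflect unequal delivery rather than the ad sets themselves.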

Analyzing and Using Test Results for Optimization

Interpreting and leveraging the data gleaned from comparative marketing assessments can significantly enhance the effectiveness of future campaigns. It is crucial to carefully analyze the results from both A/B and split testing, identifying key performance parameters such as click-through rates, conversion rates, and the overall return on investment.
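The metrics named above can be computed from raw campaign figures as in the following sketch. The function name and all numbers are hypothetical; conversion rate is taken here as conversions per click, and return on investment as profit relative to ad spend, which are common conventions but not the only ones.

```python
def campaign_kpis(impressions: int, clicks: int, conversions: int,
                  spend: float, revenue: float) -> dict[str, float]:
    """Compute click-through rate, conversion rate, and ROI for one ad variation."""
    return {
        # Share of impressions that led to a click.
        "ctr": clicks / impressions if impressions else 0.0,
        # Share of clicks that led to a conversion.
        "conversion_rate": conversions / clicks if clicks else 0.0,
        # Profit relative to spend: (revenue - spend) / spend.
        "roi": (revenue - spend) / spend if spend else 0.0,
    }


# Hypothetical figures for a single ad variation.
kpis = campaign_kpis(impressions=20_000, clicks=400, conversions=20,
                     spend=500.0, revenue=800.0)
for name, value in kpis.items():
    print(f"{name}: {value:.2%}")
```

Computing the same metrics for every variation makes the results of a test directly comparable.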

An in-depth analysis should be conducted to understand the specific elements contributing to the success or failure of certain ad variations. This information can be utilized to tweak and optimize future campaigns, ensuring they are better tailored to target audience preferences.

A continuous cycle of testing, analyzing, and refining is the key to effective marketing optimization. Thus, data from A/B and split tests on Facebook serve as valuable tools in the process of marketing optimization.

Frequently Asked Questions

What are some common mistakes to avoid when conducting A/B or split testing on Facebook?

Common errors in A/B or split testing include testing too many variables simultaneously, not allowing sufficient time for tests to reach statistical significance, and not considering external factors that might influence results.

Can A/B or split testing harm a brand’s image if it is not done properly?

Improper implementation of A/B or split testing may indeed harm a brand’s image. Misinterpretation of data, constant changes to the user experience, or poorly designed tests could lead to user dissatisfaction and negative perceptions.

Are there any specific industries or types of businesses that benefit more from A/B or split testing?

A/B or split testing proves beneficial across various industries. Particularly, e-commerce, digital marketing, software development, and educational sectors often leverage these methods to optimize user experience and conversion rates.

How does Facebook’s A/B or split testing compare to other social media platforms?

Facebook’s A/B or split testing stands out due to its vast user base, which can provide more diverse testing results. However, the effectiveness of such testing methods may vary across different social media platforms.

How long should a business run an A/B or split test on Facebook to get reliable results?

The duration for running a reliable A/B or split test varies based on individual business goals and audience size. However, a minimum of one to two weeks is generally recommended to obtain statistically significant results.
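How test duration depends on audience size can be estimated with a standard sample-size formula for comparing two proportions. The sketch below is a simplification that treats every impression as an independent observation; the function names, rates, and daily traffic figure are assumptions.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, target_rate: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate observations needed per variant to detect the given difference."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline_rate + target_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                             + target_rate * (1 - target_rate))
    ) ** 2
    return math.ceil(numerator / (target_rate - baseline_rate) ** 2)


def days_needed(sample_per_variant: int, daily_per_variant: int) -> int:
    """Days required to reach the sample size at a steady daily volume."""
    return math.ceil(sample_per_variant / daily_per_variant)


# Hypothetical goal: detect a rise in click-through rate from 2.0% to 2.5%.
n = sample_size_per_variant(baseline_rate=0.02, target_rate=0.025)
print(n, "impressions per variant")
print(days_needed(n, daily_per_variant=1_500), "days at 1,500 impressions per day")
```

Smaller expected differences or lower daily volumes lengthen the required duration, which is why a fixed number of days cannot guarantee a reliable result on its own.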